As COVID-19 spread around the globe in March 2020, reports emerged of another new, viral threat: “Zoom-bombing.”

The term derives from photo-bombing, which is defined as appearing “behind or in front of someone when their photograph is being taken, usually doing something silly as a joke.” However, for many Zoom meeting hosts and participants, and for the people who manage computing infrastructure, Zoom-bombing was no joke.

The cancellation of in-person school and university classes prompted a stock market surge for Zoom, along with considerable scrutiny of the video conferencing company’s startlingly weak privacy and security protocols.

And yet it is Zoom-bombing, the interruption of Zoom meetings by uninvited participants, that has drawn considerable news media attention since mid-March. Although the company tried to communicate best practices for preventing Zoom-bombing, the disruptions continued to proliferate, leading users and shareholders alike to organize an online petition and threaten class-action lawsuits.

Zoom-bombing gradually began to subside after the FBI issued a statement on March 30, characterizing it as a cybercrime that should be reported to law enforcement agencies.

Disrupting targets

Given the fear, disruption and anxiety produced by the COVID-19 pandemic, the intentional disruption of online work and education raises some obvious questions.

First, what would motivate someone to cause such a disruption during an unprecedented global pandemic? Is this the work of isolated individuals or of a coherent, co-ordinated campaign targeting democratic institutions and processes? What is the goal of such disruptions, and who has been targeted?

Our team of researchers at Ryerson University’s Infoscape Research Lab set out to answer these questions by studying three popular social media platforms: Twitter, Reddit and YouTube. We anticipated that Zoom-bombing would take on different characteristics on each of these platforms, since each is designed to facilitate a different form of communication.

At the outset of our research, we used digital humanities methods to track the language associated with Zoom-bombing on each of the platforms. Tracking keywords let us cast a wide net and collect as much user-generated content related to Zoom-bombing as possible from the three platforms.

Broader concerns

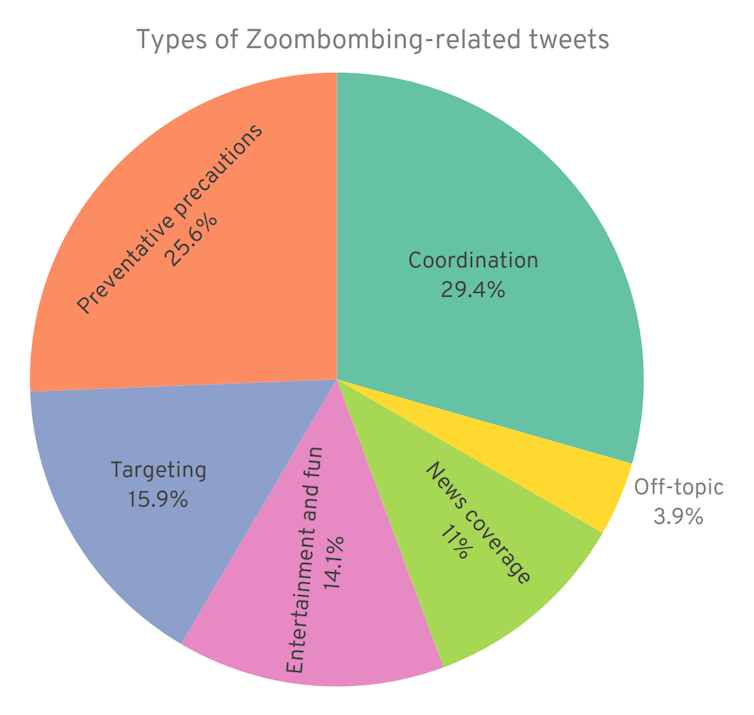

Our study analyzed a random sample of 1,000 tweets containing Zoom-bomb-related terms, posted between April 3 and 28, 2020. Over half of the tweets either sought to organize and co-ordinate Zoom-bombing, often by sharing Zoom access codes, or offered information and advice on how to avoid such online disruptions.

Tweets often reflected broader social concerns over continuing work during COVID-19, the security of online meetings and the emerging challenges to online learning. A significant percentage of tweets (15.9 per cent) specifically named online targets, including Holocaust memorials, Asian community groups, Alcoholics Anonymous meetings and various religious services.

Some tweets (9.4 per cent) featured students sharing the Zoom meeting ID codes for their own classes in order to target specific teachers. Twitter users also used the occasion to post and comment on humorous content related to the Zoom-bombing phenomenon.

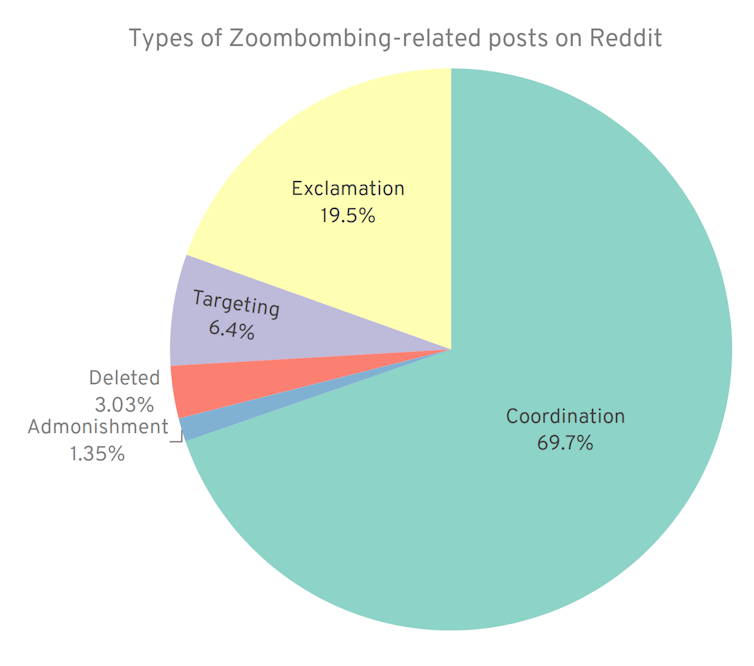

In contrast, the prevalence of popular keywords on Reddit such as “zoompranks” and “OnlineClassRaid” suggested that the platform was largely being used as a staging ground for the Zoom-bombing of online classes and meetings. An analysis of 300 random posts from Zoom-bombing subreddits (online discussion groups dedicated to specific topics) confirmed our suspicions.

Nearly 70 per cent of all posts served to co-ordinate Zoom-bombing, either by sharing practical advice, Zoom meeting ID access codes or other logistical information. If we include posts that offer short affective outbursts or reactions to Zoom-bombing, then this figure approaches 90 per cent of all posts.

Only 1.3 per cent of posts admonished members of the subreddits for launching such attacks. While the vast majority of Reddit posts sought to facilitate Zoom-bombing, we also found a small percentage (6.4 per cent) that targeted particular groups, including an LGBTQ2 social meeting and a breastfeeding support class.

Of all the platforms we studied, YouTube offered the most jarring view of Zoom-bombing, one that highlighted the popular and controversial role the platform played in circulating videos of the “funniest moments” of Zoom-bombing.

Following a method similar to the one we used for Reddit and Twitter, we analyzed a sample of 60 of the most viewed Zoom-bombing videos on YouTube. The large majority of these videos (85 per cent) were compilations, roughly 10 minutes long, of multiple Zoom-bomb clips, many of which were first shared on TikTok, a popular video-sharing platform.

The remaining videos, also compilations, included running commentary by YouTube “influencers,” individuals with large numbers of online followers. Although viewers see these micro-celebrities laughing throughout, the Zoom-bomb disruptions themselves are hard to watch: many Zoom meeting hosts and participants were confused, irritated or shocked by the actions and words of Zoom-bombers. Teachers of young children looked traumatized.

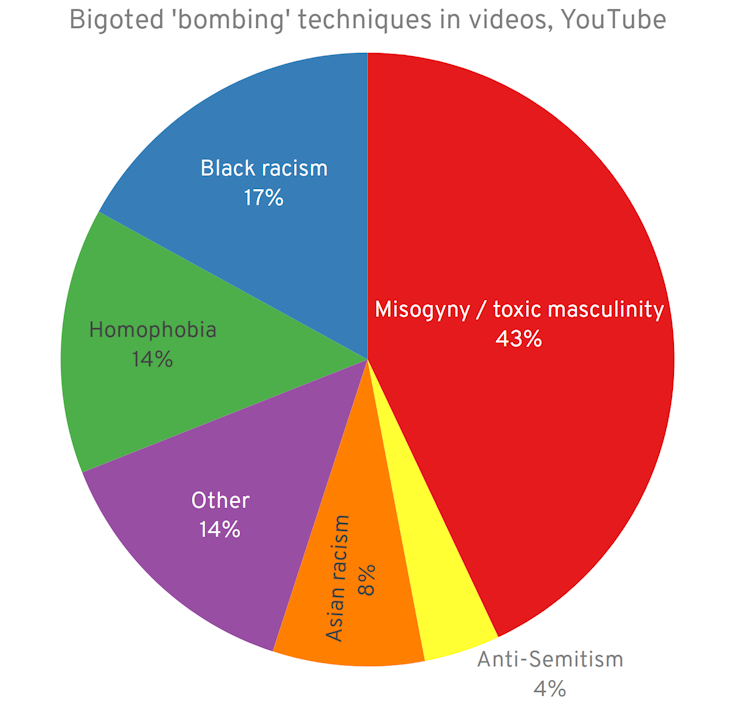

Seventy-two per cent of the sampled videos included mob-like interruptions, with multiple voices, screams, profanities and other sounds occurring at the same time. Most troubling, however, were the objectionable language and images used by Zoom-bombers.

While a few Zoom-bombs were light-hearted pranks that bemused some meeting participants, nearly 87 per cent of the YouTube compilations also contained racist, misogynist, homophobic and other objectionable content. Much of this content was directed against female teachers in Zoom classroom meetings.

Viral threats

We can draw a number of conclusions based on our research to date.

Zoom’s weak security and the rapid transition to online learning created an environment ripe for disruption and abuse by computer-savvy, overwhelmingly male high school students.

Zoom-bombing should remind us of the technological divide between the highly skilled and creative generations that live much of their lives online and older generations that struggle with platform settings, protocols and practices.

But such a generational divide should not mask the most troubling aspects of Zoom-bombing: the intentional disruption of important work and the abusive targeting of women and people of colour.

Such toxic practices, of course, predate internet videoconferencing and will, unfortunately, persist long after Zoom-bombing has ended. We may all be experiencing the pandemic together, but Zoom-bombing has also reminded us that viral threats require social solutions.

Greg Elmer, Professor, Professional Communication, Ryerson University; Anthony Glyn Burton, Master’s student, Joseph-Armand Bombardier SSHRC Scholar, Ryerson University; and Stephen J. Neville, PhD Student of Communication & Culture, York University, Canada

This article is republished from The Conversation under a Creative Commons license. Read the original article.