Alberta researchers have found a way to catch potential early signs of Alzheimer’s disease.

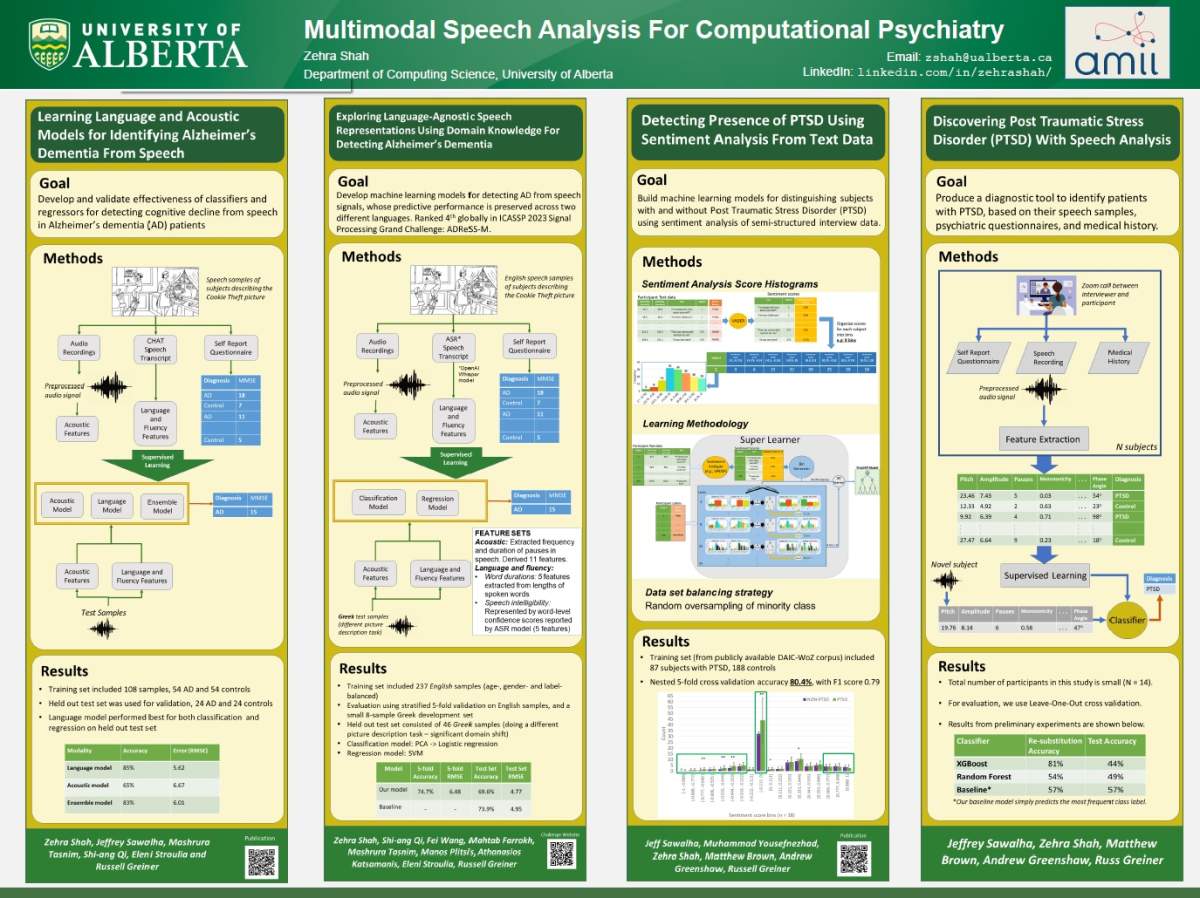

They’re using a machine-learning model to detect audio cues — certain speech patterns that are linked to a diagnosis of Alzheimer’s or other forms of dementia.

“We’re interested in looking at speech in particular as a window into the human mind, so to speak,” said Zehra Shah, a University of Alberta graduate student and lead researcher.

“The idea here is we want to look at speech as a potential biomarker in order to be able to identify patterns that might help us diagnose and monitor psychiatric disorders such as Alzheimer’s dementia.”

The technology listens for three features: pauses in speech, word length or complexity, and speech intelligibility.

“For dementia patients, because there might be a need for more recall, they tend to forget words and they need a certain amount of time to recall those words, so there will be longer pauses,” Shah explained.

“A longer word, we assume, will have a higher degree of speech complexity rather than shorter words like ‘uh’ and ‘the.’

“Longer word duration … is a proxy for speech complexity,” she added. “Again, the hypothesis here is that dementia patients would have lower speech complexity compared to healthy controls.”
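As a rough illustration of the pause and word-duration features Shah describes, the sketch below computes them from word-level timestamps. It is not the researchers' model. The `Word` type, the `pause_and_duration_features` function, the 0.25-second pause threshold and the sample timings are all assumptions made for this example, and speech intelligibility is not covered.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Word:
    """A spoken word with start and end times in seconds (hypothetical input format)."""
    text: str
    start: float
    end: float


def pause_and_duration_features(words, min_pause=0.25):
    """Return simple pause and word-duration statistics for a list of Word objects.

    min_pause is an assumed threshold: silences shorter than this are not counted.
    """
    if not words:
        return {"num_pauses": 0, "mean_pause": 0.0, "mean_word_duration": 0.0}

    # Silence between the end of one word and the start of the next.
    gaps = [nxt.start - cur.end for cur, nxt in zip(words, words[1:])]
    pauses = [g for g in gaps if g >= min_pause]

    # Word duration stands in for speech complexity, as in the article.
    durations = [w.end - w.start for w in words]

    return {
        "num_pauses": len(pauses),
        "mean_pause": mean(pauses) if pauses else 0.0,
        "mean_word_duration": mean(durations),
    }


# Made-up timings for illustration only.
sample = [
    Word("how", 0.0, 0.2),
    Word("is", 0.3, 0.4),
    Word("your", 1.0, 1.3),
    Word("day", 1.4, 1.8),
]
features = pause_and_duration_features(sample)
print(features)
```

Because these statistics depend only on timing and not on which words were spoken, the same calculation could in principle be applied to recordings in any language.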

The researchers studied 237 English-speaking individuals and 46 Greek-speaking individuals. Half were labeled as dementia patients and half served as a control population.

The model was able to distinguish Alzheimer’s patients from healthy controls with 70-75 per cent accuracy.

“It’s like a support tool for clinical diagnosis,” Shah said. “But we don’t foresee this tool to be a diagnostic tool in and of itself. It would need a human in the loop.

“It’s the first point, triaging, screening for potentially at-risk populations to see where they are at this point in time and possibly flagging any higher-risk individuals in this category and asking them to look into further screening.”

It’s not intended to replace clinical diagnosis. But Shah hopes the technology will eventually lead to widespread, easy and regular access to an early detection tool, available to anyone with a smartphone.

The project is still in its early stages but Shah thinks it has great potential and could be formatted for an app.

“Which would not be monitoring continuously but you can open the app and speak into it. For example, you could have the app ask you on a daily basis: ‘How is your day going?’ and the person just responds in a spontaneous manner and the app could, in the background, potentially look at features in your speech to see how it’s changed.”

There are also opportunities for this method to increase access to health care.

“We find that speech as a biomarker is really interesting, looking at it from a remote mental-health-care perspective,” Shah said. “We can think about the potential of using this kind of technology for tele-health, remote mental health monitoring.”

It could also be used in any language, since it listens not for specific words but for pauses and word length.

“We are looking at speech samples without looking at the actual language content since we’re looking at features that would work across different languages and so we’re not really focused on the word content but we’re looking at other features,” Shah said.

“We’re looking at a language-agnostic tool that can do the same thing. We would like for this technology to be utilized across a slew of different languages, so we’re not restricted to the English language any longer and so that’s where the potential lies for scalability as well.”

The machine-learning model was described in a paper, “Exploring Language-Agnostic Speech Representations Using Domain Knowledge for Detecting Alzheimer’s Dementia.”

The research team ranked first in North America and fourth globally in the ICASSP 2023 Signal Processing Grand Challenge.
