YouTube remembers what it can about us – sometimes a little too much.

You have your YouTube and I have mine. They’re tailored to us, for better or worse – YouTube’s algorithms are set up to figure out what we want to watch, give it to us and have us watch ads along the way. But what does a brand-new account see?

We started with a new, unused Google account with no YouTube viewing history, and entered a few very basic searches.

‘Moon landing’

Our first search was ‘moon landing,’ which you’d think would be innocent enough. However, four out of the first six videos were promoting theories that the moon landings never happened:

‘The Pope’

The Pope what? Well, nothing in particular, for now.

We have five videos. One is from a mainstream source, three promote conspiracy theories and one calls the Catholic church, in its entirety, ‘a wolf in sheep’s clothing.’

Is the Pope …

Next, we let YouTube autocomplete the search ‘Is the Pope’. The results were foreseeable:

Which did we choose? Well, none – we just left it. Here’s what that looked like:


‘Vaccination’

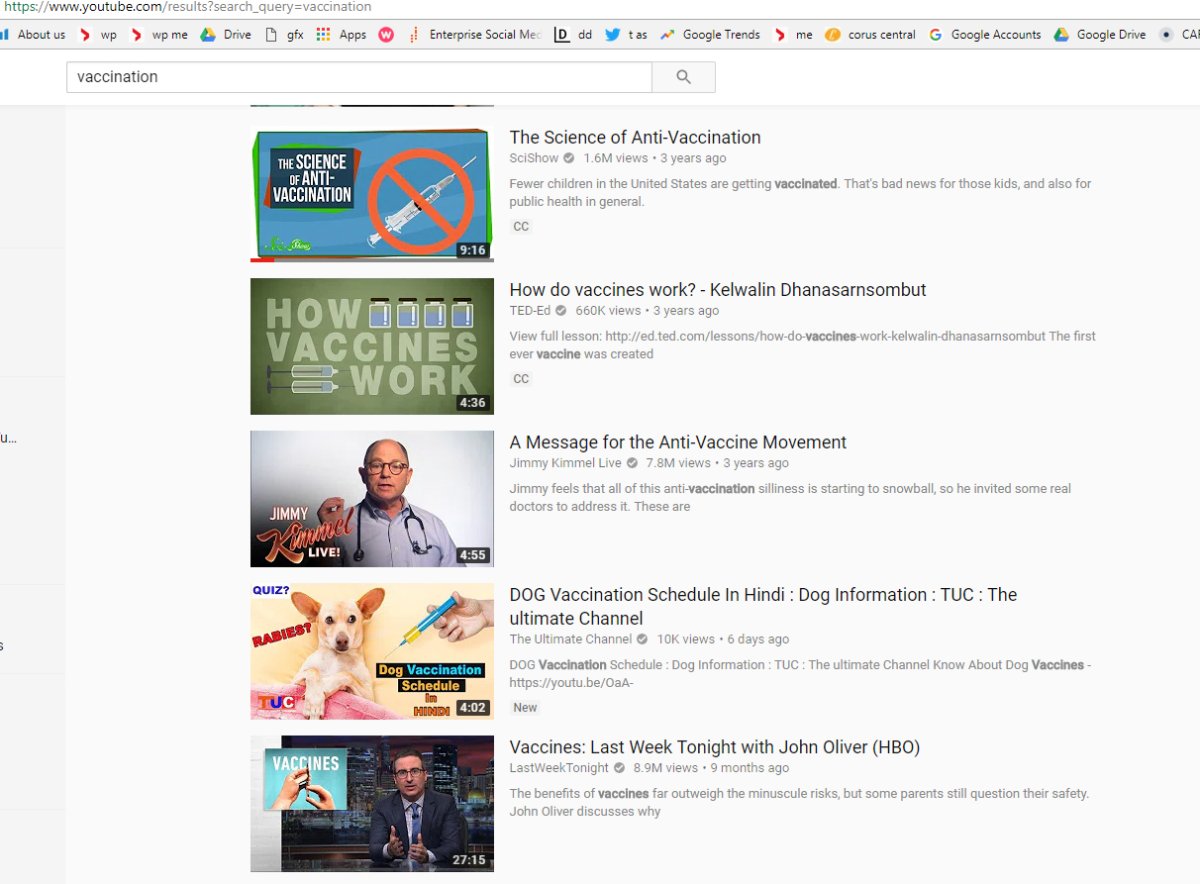

With that precedent, we were reassured to be offered videos that stuck with mainstream science’s view that vaccination is safe and effective.

But then we tried to see what YouTube’s machine learning algorithm, which is designed to figure out what you want and give you more of it for advertising purposes …

… would do if we split personalities.

Our first account clicked on a pro-vax video, which taught YouTube to offer us more:

Our second account, curious about why vaccination would ‘destroy natural herd immunity’ (isn’t it the opposite?), clicked on a video which turned out to be by Andrew Wakefield, a former British doctor whose licence to practice medicine was taken away in 2010.

(Wakefield’s licence was pulled for a number of reasons, one being that the board found ethical problems with a paper he published claiming that vaccination caused autism. The board also took issue with him over an incident in which he paid children at his son’s birthday party £5 each for blood samples.)

Notice how different the suggested videos to the right of the main screen immediately become. YouTube has set our second account up to basically binge-watch Andrew Wakefield. (Wakefield can call himself Dr. Andrew Wakefield because he holds a medical degree.)

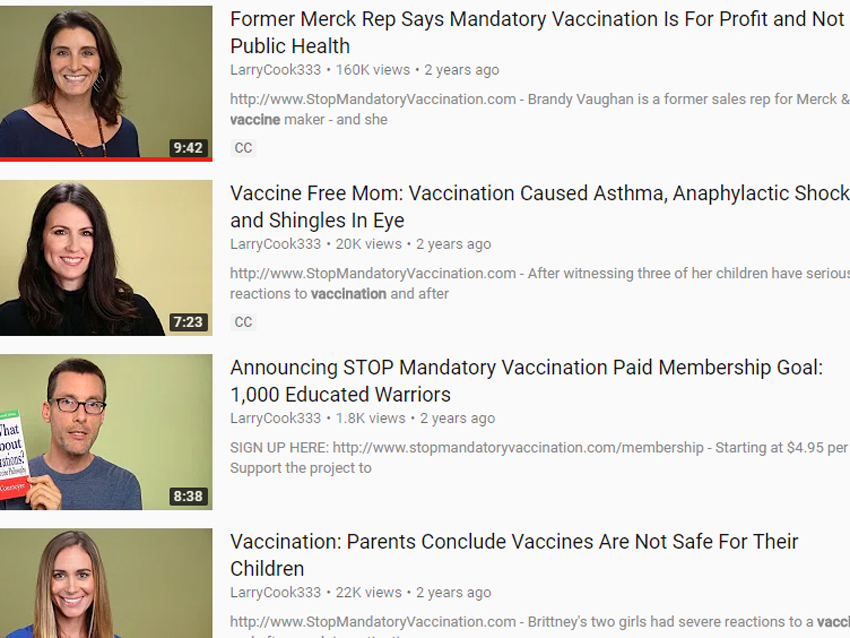

Who is hosting Wakefield’s videos, anyway? We had a look at the account, @LarryCook333 (it’s basically Anti-Vax Central), and in the process clicked on some of its videos.

Once I returned to YouTube’s main page and searched for ‘vaccination’ again, I found that I’d successfully trapped my account in an anti-vax filter bubble. YouTube had figured out what to offer me, and vaccination videos based on mainstream science got harder and harder to find:

WATCH: As the myths and misconceptions about vaccine safety continue to grow, so does the impact these unfounded beliefs have on public health. Timothy Caulfield, research chair in health law and policy at the University of Alberta, tackles the frustrating topic in his latest book “The Vaccination Picture.”

“It is possible that YouTube’s recommender algorithm has a bias toward inflammatory content,” Zeynep Tufekci wrote recently.

“What we are witnessing is the computational exploitation of a natural human desire: to look ‘behind the curtain,’ to dig deeper into something that engages us. As we click and click, we are carried along by the exciting sensation of uncovering more secrets and deeper truths. YouTube leads viewers down a rabbit hole of extremism, while Google racks up the ad sales.”

In fake news news:

- Voter targeting of the kind that Cambridge Analytica engages in will soon seem quite primitive, Justin Hendrix and David Carroll warn in Technology Review. “The future of our democracy requires us to imagine these technologies as potential threats and to recognize that unfettered innovation in social media has had its heyday.”

- Grindr was sharing users’ self-declared HIV status with third-party companies until Vox reported on it this week.

- The Atlantic Council’s Digital Forensic Research Lab offers some hints on how to spot Russian troll accounts on Twitter. Among them: Russian has no articles (in English, ‘a’ or ‘the’) so Russian speakers will often mix them up or omit them, as in this example. Oddly phrased questions are another clue. DFR also recommends searching backward in an account for a history of parroting Kremlin talking points which have little traction in North America, like narratives about Ukraine, Crimea or the 2015 murder of opposition leader Boris Nemtsov.

- We’re not quite sure where Shakespeare wrote this, despite this video’s 24,259,186 views on Facebook. (You can search the Bard’s complete works, from Sonnet 1 to Venus and Adonis, here. If you find it, let us know in the comments.)
