People in Florida and the Caribbean who are fleeing Hurricane Irma, battening down the hatches or cleaning up the aftermath have a lot to deal with.

So it’s just as well that flying sharks dropping from the sky aren’t among their problems.

A fake screenshot circulating online implies otherwise, though.

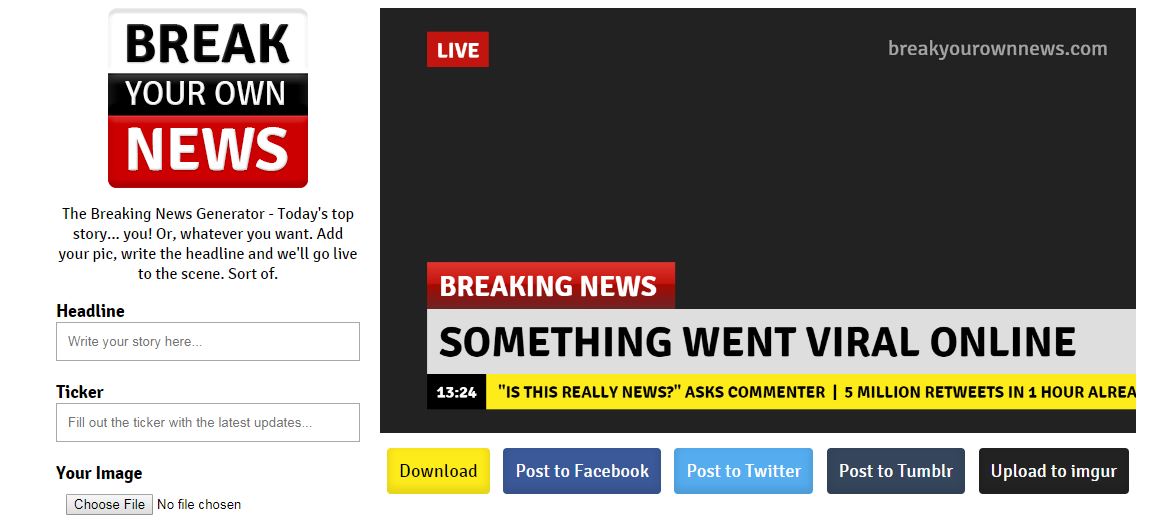

Snopes explains how the image was likely created: with breakyourownnews.com, a site that lets users overlay fake cable news-style graphics on an image they upload.

The original poster didn’t remove the breakyourownnews.com logo from the image, though it’s hard to see because it is rendered white on light grey. Some Photoshop skills could make it disappear completely.


Last week we rounded up Hurricane Harvey-related fake news; this week it’s Hurricane Irma. Irma fakes fall into similar categories:

- Repurposed still images and video (“fake” in the sense that the events happened, but at a different place and time).

- Assertions that Irma is a “Category 6” hurricane (there is no such thing).

- Assertions that your valuables will be safe in the dishwasher (they won’t); Buzzfeed reporter Jane Lytvynenko has a wonderfully deadpan Twitter exchange with Maytag here. “We can only recommend the use of your dishwasher as outlined in the user manual supplied with your dishwasher,” @MaytagCare concludes.

- Potentially dangerous fakes, such as fake hurricane maps; a rumour that the walkie-talkie app Zello will work without an internet connection, which Zello calls “massive misinformation”; and a claim that an (imaginary) U.S. federal law requires hotels to take in pets during a disaster.

Buzzfeed, CNN, Newsweek and the Atlanta Journal-Constitution all have Irma-related fake news roundups.

In fake news news:

- Facebook revealed on Thursday that a Russian troll farm had bought US$100,000 worth of political ads around the time of the 2016 U.S. election. Political advertising on Facebook is potentially game-changing in two ways: 1) ads can be efficiently micro-targeted to narrow demographic slices and geographical areas, and 2) the ads themselves are very hard to monitor.

- @BrendanNyhan links to two Twitter threads by political scientists, one questioning whether Facebook ads in particular change voter behaviour, and another pointing out that it’s important to learn how and at whom the ads were targeted. (Facebook hasn’t released this information.)

- The Daily Beast points out that Russian-funded election ads might have been seen by 28 per cent of American adults, despite the fact that $100,000 is a tiny fraction of the money spent on a U.S. national campaign. “It turns out $100,000 on Facebook can go a surprisingly long way, if it’s used right … The exact reach of Russia’s campaign remains a mystery that only Facebook can solve.”

- A U.S. senator argues that social media-based political advertising should have to meet the same disclosure requirements that traditional broadcast election ads do, Buzzfeed explains. “I think it is unlikely that we will ever have as much knowledge about the content of advertising as we had previously,” one political scientist laments. “This poses challenges for researchers but it also poses challenges for accountability in democracy.”

- Radio Free Europe sums up what we know about the St. Petersburg-based Internet Research Agency, identified as the source of the ads, which it calls “… a hard-charging operation that relies on a combination of methods — from generating fake news, disinformation, and memes to building up networks of fake social-media accounts run by ‘troll’ employees.” The New York Times had a deep dive into the Internet Research Agency in 2015, back when we put “troll farm” in quotation marks. Long read, worth your time.

- The Times profiles, if that’s the word, some sock puppet U.S. voters who were actually invented in Russia and unleashed on Facebook to abuse Hillary Clinton. (It’s hard to avoid the impression, though, that the trolls’ technical skill outruns their English fluency or sense of North American culture. “The Russian efforts were sometimes crude or off-key, with a trial-and-error feel,” the Times points out. One wrote a Facebook post that asked “Hey truth seekers! Who can tell me who are #DCLeaks?” Another said that documents she was referring to “describe eventual means and plans of supporting opposition movements, groups or individuals in various countries.”)

- The Times reviews Kurt Andersen’s Fantasyland: How America Went Haywire: A 500-Year History, in which he argues that in the United States “… an initial devotion to religious and intellectual freedom … has over the centuries morphed into a fierce entitlement to custom-made reality. Your right to believe in angels and your neighbor’s right to believe in U.F.O.s and Rachel Dolezal’s right to believe she is black lead naturally to our president’s right to insist that his crowds were bigger.”

- Bloomberg points out that bots linked to Russia are already laying the groundwork for influence operations connected to the 2018 U.S. midterm elections. “Analysts say Russia’s longer-term goal is less focused on Trump than on helping disrupt or undermine U.S. democratic institutions — an effort that has been underway for decades but which now has a more technological edge.”

- The Guardian looks at an elaborate hoax based on forged documents, started in 2013 but apparently unkillable, alleging a Muslim plot to take over schools in Birmingham. (The real story, being real life, is more complex and interesting.)

- Russia’s endless experimenting with social media as a platform for disinformation sucks up most of the attention in the room, but a Harvard study written up in War on the Rocks suggests we should keep at least half an eye on China, which astroturfs online discussions on an ambitious scale. “… the government controls the narrative by flooding social media with biased, pro-government information, distracting from real events while minimizing reliance on other forms of censorship … astroturfing moves the discussion away from unpopular or controversial topics in favor of a more popular, self-aggrandizing narrative.”

- The Atlantic Council’s Digital Forensic Research Lab offers 12 ways to spot a Twitter bot. They look for: heavy and endless tweeting (one account they spotted had tweeted over 200,000 times despite being less than a year old), a pattern of retweeting with no original posts, accounts that almost never post but are retweeted tens of thousands of times when they do, not having an avatar and having followers who don’t have avatars either, scrambled alphanumerical characters for a handle, a weird mix of languages, matching numbers of likes and retweets (the bot will be programmed to both like and retweet) and … a lack of effort generally.

- And there’s a help-wanted ad on Upwork: “Writer Needed for Republican Oriented (USA) Political Website – Macedonia Based,” seeking someone who will spend their time “rewriting provided stories.” Pays €500 a month.
