Recent studies indicate that the face recognition technology used in consumer devices can discriminate based on gender and race.

A new study out of the MIT Media Lab indicates that when certain face recognition products are shown photos of a white man, the software correctly guesses the person’s gender 99 per cent of the time. However, the study found that for subjects with darker skin, the software made more than 35 per cent more mistakes.

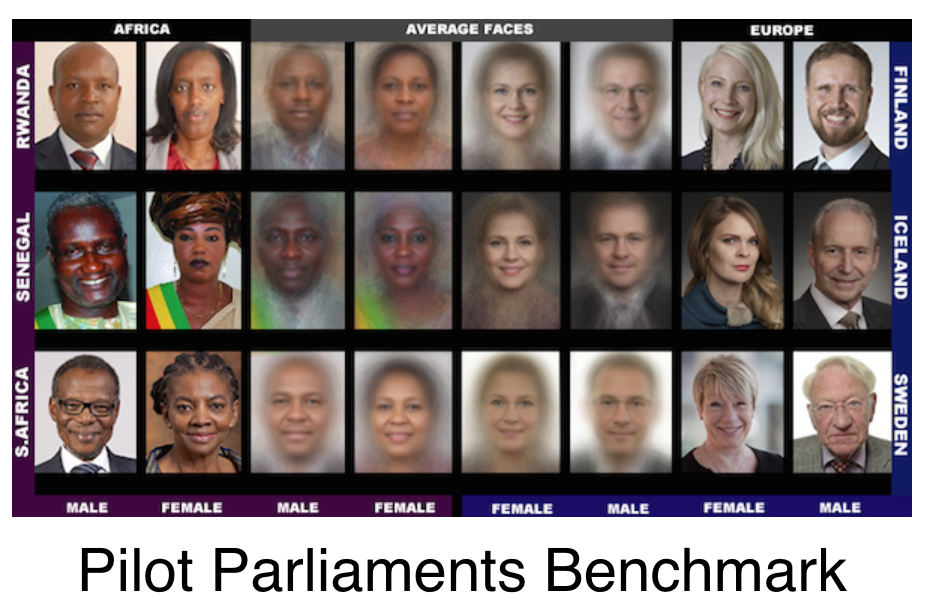

As part of the Gender Shades project, 1,270 photos of individuals from three African countries and three European countries were evaluated with artificial intelligence (AI) products from IBM, Microsoft and Face++. The photos were further classified by gender and by skin colour before being tested on these products.

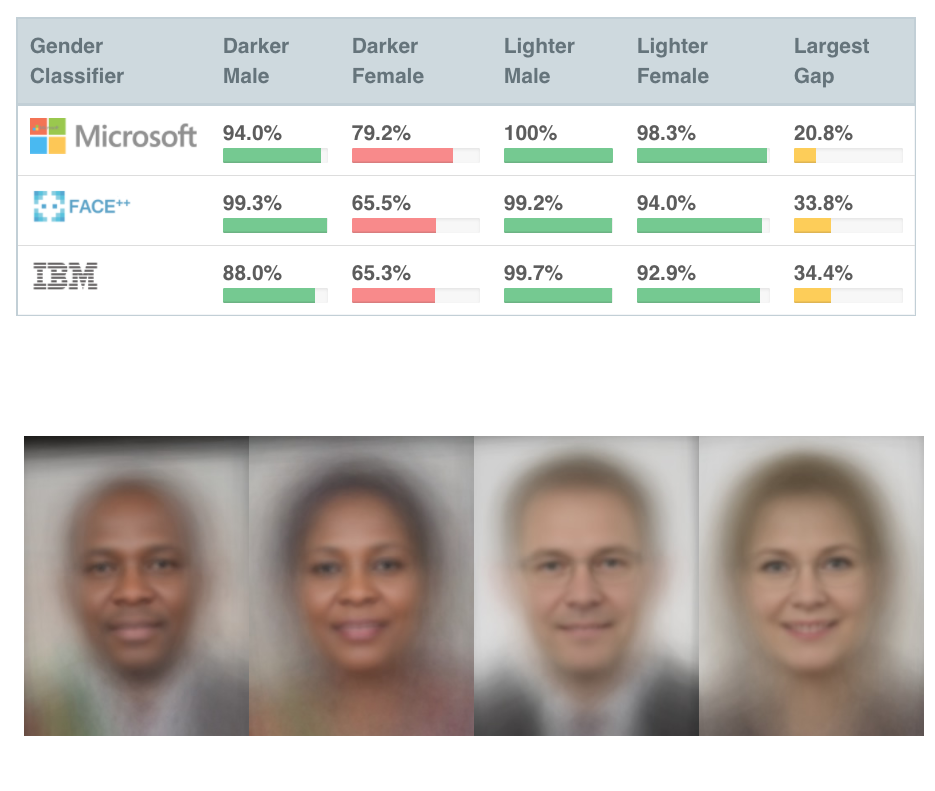

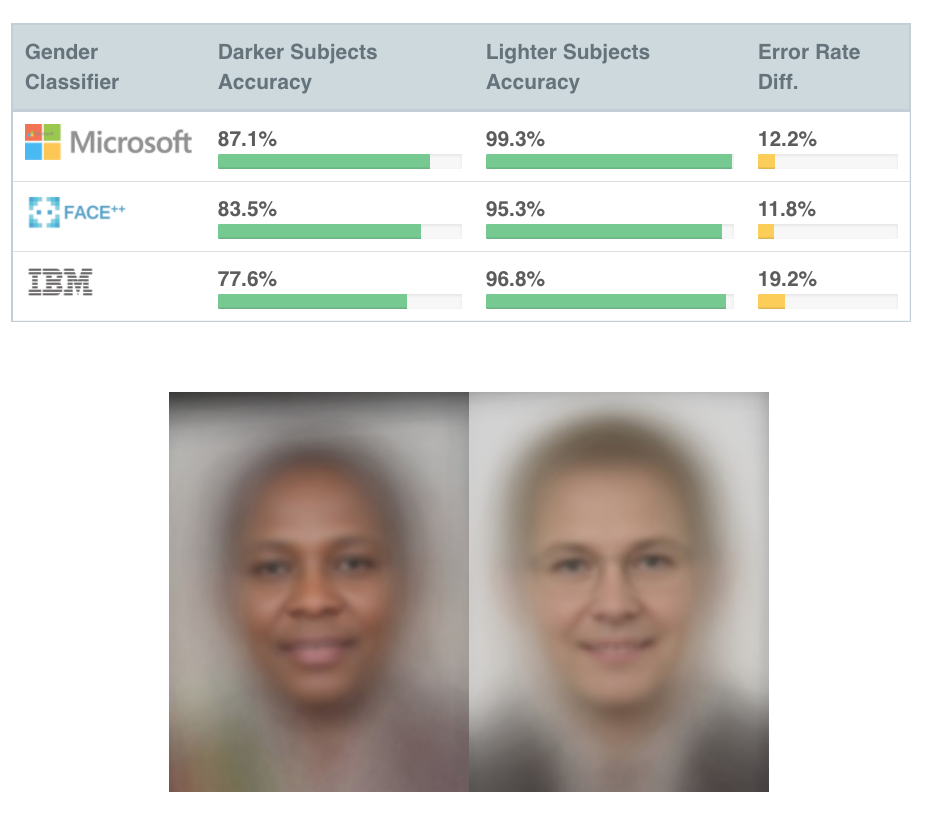

The study notes that while each company appears to have a relatively high rate of accuracy overall, of between 87 and 94 per cent, there were noticeable differences in the misidentified images in different groups.

For example, all companies’ products performed better on photos of men than on photos of women, with the gap in error rates between the two groups ranging from 8.1 to 20.6 per cent. In addition, every company’s products made fewer errors on lighter-skinned subjects than on darker-skinned subjects, with the gap in error rates ranging from roughly 11 to 20 per cent.
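To illustrate how a high overall accuracy can hide large differences between groups, here is a minimal Python sketch of a disaggregated evaluation. It is not the study's code: the function names, group labels and toy records are all hypothetical and chosen only to show the arithmetic.

```python
from collections import defaultdict


def overall_error_rate(records):
    """Share of all records where the predicted label differs from the true one."""
    records = list(records)
    wrong = sum(1 for _, truth, predicted in records if predicted != truth)
    return wrong / len(records)


def error_rates_by_group(records):
    """Error rate computed separately for each group.

    Each record is a (group, true_label, predicted_label) tuple.
    """
    totals = defaultdict(int)
    errors = defaultdict(int)
    for group, truth, predicted in records:
        totals[group] += 1
        if predicted != truth:
            errors[group] += 1
    return {group: errors[group] / totals[group] for group in totals}


# Made-up toy data, NOT figures from the study:
# 10 photos in one group, all classified correctly,
# and 10 photos in another group, 3 of them misclassified.
toy_records = (
    [("group A", "M", "M")] * 10
    + [("group B", "F", "F")] * 7
    + [("group B", "F", "M")] * 3
)

overall = overall_error_rate(toy_records)
by_group = error_rates_by_group(toy_records)

print(f"overall error rate: {overall:.0%}")
for group, rate in by_group.items():
    print(f"{group}: {rate:.0%}")
```

In this toy data the overall error rate is 15 per cent, yet every one of the mistakes falls on a single group, whose own error rate is 30 per cent. Reporting only the overall figure would hide that.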

Products that use facial recognition and AI software include consumer smartphones, photography apps such as Google Photos, and public-sector systems in law enforcement and health care.

There’s been a lot of speculation about whether human biases can be transferred to AI software. The majority of AI software is built on machine learning technology, which relies on data.

Joy Buolamwini, the lead author of the study, gave a TED Talk recently to discuss why this research is significant.

“This kind of technology is being built on machine-learning techniques. And machine-learning techniques are based on data. So if you have biased data in the input and it’s not addressed, you’re going to have biased outcomes,” said Buolamwini in an interview.

In the research paper released alongside the findings of the study, Buolamwini and her co-author, Microsoft’s Timnit Gebru, write that society is beginning to rely on artificial intelligence for more important functions. These include hiring and firing decisions, loan approvals, and how long individuals spend in prison after committing a crime.

The study uses several examples to illustrate the point. For example, if biased samples are used in AI-based health-care solutions, the resulting treatments may not work for certain portions of the population.

A second example can be seen in the use of facial recognition by law enforcement. A Georgetown study states that at least 117 million Americans are included in police facial recognition networks. A research investigation in 2016 across 100 police departments revealed that African Americans are more likely to be stopped by police and be subject to face recognition searches than individuals of other ethnicities.

“Some face recognition systems have been shown to misidentify people of color, women, and young people at high rates. Monitoring phenotypic and demographic accuracy of these systems as well as their use is necessary to protect citizens’ rights and keep vendors and law enforcement accountable to the public,” read the study.

A few years ago, Google was forced to apologize for a flaw in its AI-driven app Google Photos, which mistakenly labelled African American people as “gorillas.” While the company promptly apologized and promised to take “immediate action to prevent this type of result from appearing,” the incident raised questions about the quality of the datasets driving the algorithm.

Both IBM and Microsoft responded to the findings of the Gender Shades project, pledging to make data ethics a priority in their development of AI products.

“IBM is deeply committed to delivering services that are unbiased, explainable, value aligned, and transparent. To deal with possible sources of bias, we have several ongoing projects to address dataset bias in facial analysis – including not only gender and skin type, but also bias related to age groups, different ethnicities, and factors such as pose, illumination, resolution, expression, and decoration,” said IBM researchers in their response.

IBM stated that it is developing a million-scale dataset of face images to balance data from multiple countries and reduce sample selection bias. Microsoft also released a short response, promising to improve the accuracy of its facial recognition products.

“We believe the fairness of AI technologies is a critical issue for the industry and one that Microsoft takes very seriously. We’ve already taken steps to improve the accuracy of our facial recognition technology and we’re continuing to invest in research to recognize, understand and remove bias,” said the statement.
