An American Civil Liberties Union investigation recently revealed that Amazon is selling facial-recognition software to law enforcement, and civil liberties groups are up in arms about the technology’s potential threats to privacy.

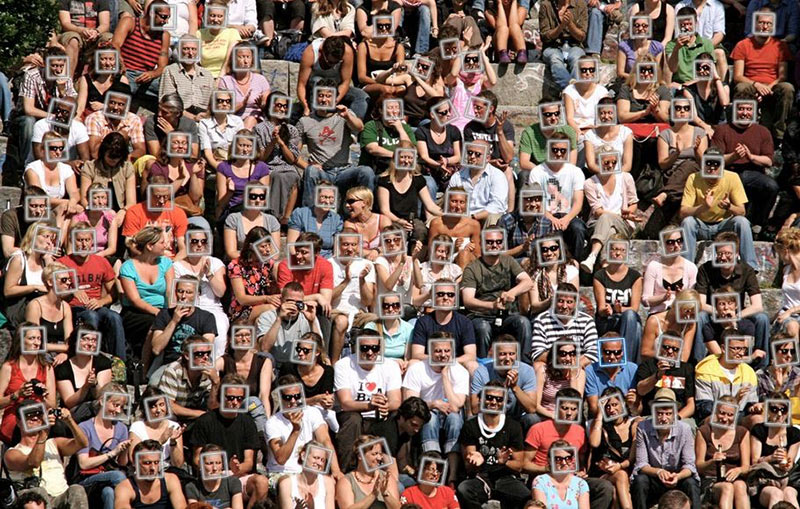

The service, called Rekognition, uses artificial intelligence to identify objects, people and scenes in video and images. In emails released Tuesday by the ACLU, Amazon claims its product can search databases holding millions of faces and can identify up to 100 people in a single image.

Furthermore, the e-commerce giant claims the service can use streamed, real-time video of crowds and public places to track people, and has proposed that the software may be useful for police body cameras and other forms of surveillance.

“That gives the government a really far reach into people’s lives,” explained Shankar Narayan, technology and liberty project director with the ACLU. “We already know about the challenges that facial-recognition technologies have.”

He said that, beyond the wide-ranging privacy concerns the technology raises, flawed databases make facial-recognition tools for law enforcement even more dangerous for marginalized groups in society.

Washington County, Ore., one of the jurisdictions that have moved to deploy the software, built a mobile app that its deputies can use to scan any image against the county's database of 300,000 faces.

Narayan explained that people of colour and other minorities are disproportionately represented in criminal databases across the United States, meaning that “you’re more likely to impact the people that are already in them.”

In a statement released by the ACLU, Malkia Cyril, executive director of the Center for Media Justice, said: "We already know that facial-recognition algorithms discriminate against black faces, and are being used to violate the human rights of immigrants."

The emails secured by the ACLU through a freedom-of-information request revealed that law enforcement agencies in California and Arizona, as well as multiple domestic organizations, have expressed interest in Rekognition, and that Orlando, Fla., has already used the software to search for people in footage drawn from the city's surveillance cameras.

“Rekognition marketing materials read like a user manual for authoritarian surveillance,” said Nicole Ozer, technology and civil liberties director for the ACLU of California.

“Once a dangerous surveillance system like this is turned against the public, the harm can’t be undone. Particularly in the current political climate, we need to stop supercharged surveillance before it is used to track protesters, target immigrants and spy on entire neighborhoods. We’re blowing the whistle before it’s too late,” she said in a statement.

It's important to note that law enforcement agencies are already using facial-recognition technologies, and Amazon's products aren't substantially different. However, Clare Garvie, an associate at the Center on Privacy and Technology at Georgetown University Law Center, told the Associated Press that Amazon's vast reach and its interest in selling to more police departments at extremely low cost are troubling.

“This raises very real questions about the ability to remain anonymous in public spaces,” Garvie said.

While police might be able to videotape public demonstrations, face recognition is not merely an extension of photography but a biometric measurement — more akin to police walking through a demonstration and demanding identification from everyone there, she said.

The ACLU, along with about 40 civil liberties groups, sent a signed letter petitioning Amazon CEO Jeff Bezos to stop selling Rekognition to law enforcement and to refrain from selling surveillance systems to the government, in the interest of maintaining consumer trust.

The letter called on Amazon to "stop powering a government surveillance infrastructure that poses a grave threat to customers and communities across the country." It was signed by several groups, including AI Now, Color of Change, OneAmerica and the Student Immigrant Movement.

Narayan pointed to existing examples of hyper-surveillance in the world today, singling out China as a prime example. This past April, facial-recognition technology helped police in China catch a man wanted for economic crimes by identifying him in a crowd of more than 50,000 people at a concert by Hong Kong pop star Jacky Cheung.

In addition, Hangzhou No. 11 High School in China is using facial-recognition technology to scan students every 30 seconds to record their facial expressions — categorizing them as happy, angry, fearful, confused or upset as part of behavioral analysis.

Narayan warns that Western countries have the opportunity to stop the progression of these surveillance tactics before they get out of hand.

“What we’re really trying to do is flip the conversation. Right now, the technology is created in a black box and it undermines our civil liberties. We still have a chance to avoid becoming China,” he said.

Google came under fire earlier this year when its involvement in Project Maven came to light. The widely contested decision saw the company provide artificial-intelligence services to the military pilot program, which would speed up the analysis of drone footage by classifying images of objects and people. A dozen Google employees resigned last week in protest of the company's involvement in a project perceived to erode user trust.

Narayan explained that tech companies like Amazon and Google have a responsibility to engage with the public on the deployment of programs like these to ensure that civil rights aren’t being infringed on in the process.

“In the case of Amazon, it really seems like they treated this like another developer project,” he said.

“The responsibility of tech companies goes far beyond that.”

-With a file from the Associated Press.