In the aftermath of any kind of newsworthy tragedy, there’s a sorry parade of opportunists eager to make up alternate realities about it, often before there’s reliable real information.

On Monday, we looked at the case of Geary Danley, an Arkansas grandfather who was accused by right-wing social media of being the Las Vegas shooter. Why? As far as anyone could tell, because he shared a last name with the real killer’s housemate and liked various liberal causes on Facebook.

A meme of this kind offers a glimpse into a culture whose first instinct, on learning of a mass tragedy, is to find a way of ‘winning’ it as an exercise in ideological point-scoring.

Snopes and BuzzFeed have a wide-ranging tour of conspiracy theories and inventions of one sort or another about the massacre, and Alex Jones’s YouTube channel has, to date, no fewer than 141 videos about Las Vegas, several with over 100,000 views and one with over a million.

A brief tour: the shootings were connected to ISIS, or perhaps Antifa; it was an attack on conservatives; there were many more gunmen; there’s definitely a coverup; the attack is a ‘false flag’. (Jones has reliably called every attack, massacre or tragedy going back to 9/11 a ‘false flag’.) If you take the time to classify them, there are probably half a dozen separate themes, each repeated multiple times.

There’s not much we’re ever going to be able to do about that particular end of the fever swamp.

Earlier this week, though, serious questions were raised about the unwitting role that mainstream social media platforms play in amplifying messages like this. Often, it seems, algorithms act instantly, creating crises that well-meaning humans sort out hours later when they get to work and the complaints start rolling in.

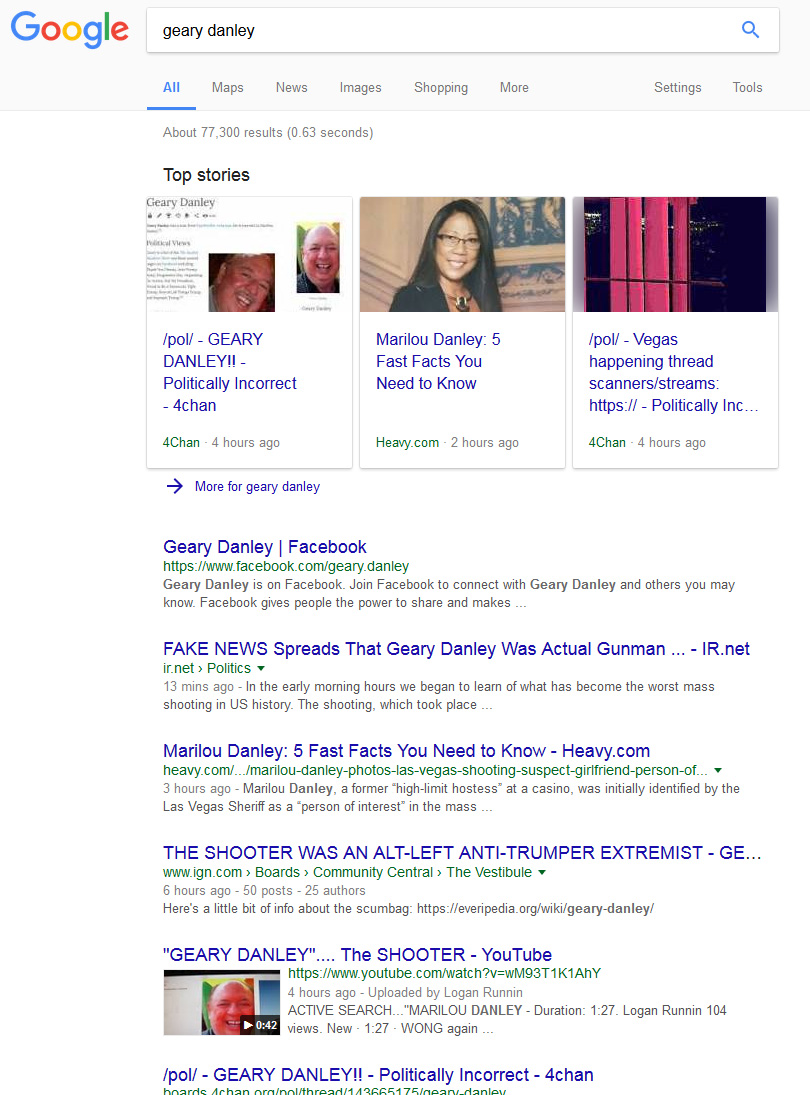

Here’s what a Google search for Geary Danley looked like on Monday morning in working hours, EDT. Bear in mind that Danley’s name was well-enough known at this point that reporters were Googling it:

Is Danley guilty of the worst mass murder in U.S. history? Or is he falsely accused? For much of Monday, Google offered a jumble of possible facts; take your pick. In the top stories slot at the top of the page, where they get a bit of implied legitimacy, are threads on 4chan, a discussion board which has been a toxic centre of alt-right troll culture.

Google fixed the problem by mid-day in Ontario, on a time scale that looked very much like the start of the business day in California. But the question remains why the top story feature was allowed to draw from 4chan, of all places, in the first place. Here’s what the company had to say:

Facebook had its own woes.

The platform’s ‘safety check’ page for the Las Vegas shootings was promoting a variety of fake news sites, including a site called Alt-Right News, ‘funny video’ links and a scam involving Bitcoin before the grown-ups woke up and fixed the problem.

Bear in mind that this is the site that’s presented to people who are trying to figure out whether family members are alive or dead.

YouTube fared no better: videos calling the shooting a hoax surfaced at the top of search results, prompting bitter criticism from survivors. (The Guardian reported that the video platform had changed its algorithms, but on Friday, three of the top six YouTube results for a search for ‘las vegas shooting’ were videos peddling conspiracy theories. One was from Jones, one from a sort of low-rent imitator, and the third was selling ‘the cleanest superfoods in the world’.)

In all cases, the problem is an unavoidable result of trying to automate the human process of judging how, when and whether to present information to other humans, which we might as well call editing. Editing is expensive, and not part of the business plan; algorithms are cheap, and are part of the business plan.

Platforms like Google and Facebook try to automate the judgment that would once have been exercised by the editorial departments, and for that matter the ad departments, of traditional media companies.

But algorithms turn out to be easy to manipulate if you know what you’re doing, and the result, in a crisis, is humiliation — and humans having to be paid to intervene anyway.

In fake news news:

- The Catalan government’s attempt to have a referendum last Sunday about seceding from Spain may or may not have been illegal; the Spanish government’s response to it was certainly tone-deaf and brutal. Poynter has a tour of fake news stories tending to favour both sides, some depicting police brutality that didn’t happen (though there was enough real material to work with) and others attacks on police that didn’t happen either. As is often the case, a reverse image search debunked a lot of frauds.

- Russian online propaganda operators seem not to have made a strong effort on Catalonia, the Digital Forensic Research Lab concludes: “This approach differs from the far more systematically hostile coverage which the Kremlin’s outlets devote to issues which are more central to Russian interests, such as the conflicts in Ukraine and Syria, and Russia’s relationship with NATO. This may reflect a desire not to antagonize Madrid with too blatant a bias, or a simple lack of a unifying narrative between the different elements of the network.” (DFR’s analysis runs counter to a story in El Pais, a Madrid daily, which asserted that “… the network of fake-news producers that Russia has employed to weaken the United States and the European Union is now operating at full speed on Catalonia.”)

- Poynter also explains why fighting fake news is much harder on closed networks like WhatsApp than on Facebook, let alone Twitter. “WhatsApp was designed to keep people’s information secure and private, so no one is able to access the contents of people’s messages,” the company says. “We recognize that there is a false news challenge.”

- An NSA contractor unwisely took home documents showing U.S. plans for defending against a cyberattack, and copied them onto his own computer. Even more unwisely, he was running Russian-made anti-virus software. Now, Russian hackers have the documents, the Wall Street Journal reports.

- Russia is targeting smartphones carried by NATO troops in the Baltic states, the WSJ reports. They talk to an American colonel who realized that hackers were trying to geolocate him through his personal iPhone. In this case, the hackers seem to have been passively collecting information about troop movements, but in Ukraine, soldiers have received propaganda text messages apparently from Russian sources. (The methods are new, but the messages themselves, playing on soldiers’ fear and suspicion of civilian leaders, are much older.)

- Acting on legal advice, Facebook cut mentions of Russia from an April report on how the platform was used for ‘influence operations’ during the 2016 U.S. election, the WSJ reports. (In an awkward construction, Facebook did admit that its April investigation “does not contradict” a U.S. intelligence report that did name Russia.) “The debated inclusion of Russia in the April report raises new questions about when Facebook became aware of Russian manipulation of its platform during the election,” the story notes.

- BuzzFeed has a deeply researched long read, worth your time, on the depth of the links between Breitbart, Milo Yiannopoulos and explicit neo-Nazis. Breitbart, of course, was run before mid-2016, and is once again run, by Steve Bannon, who took a break to be chief executive of Donald Trump’s campaign and later White House chief strategist. Breitbart “… dredges up the resentments of people around the world, sifts through these grievances for ideas and content, and propels them from the unsavory parts of the internet up to TrumpWorld, collecting advertisers’ checks all along the way,” Joseph Bernstein writes.

- The Texas Observer explains Texas GOP consultant Vincent Harris’s transition to consulting for the far-right German AfD: “The goal of the AfD’s very successful digital campaign, run with Harris’ help, was ‘to make people less shy about identifying’ with the party — in other words, to help break down German societal taboos against association with the far right.”

- Russian operators set up a series of accounts during the 2016 U.S. election designed to find out which individual U.S. voters were susceptible to propaganda, then targeted them personally with powerful advertising tools, the Washington Post reported Monday.

- U.S. congressional investigators are taking an aggressive interest in how Google and Facebook were used to spread misinformation and propaganda during the election. Politico points out that the companies affected — which closely guard secrets about algorithms and search rankings — potentially have a lot to lose. “Technology companies have traditionally balked at the idea that they should have to lift their hoods to lawmakers or regulators. For one thing, the algorithms in some cases constitute the bulk of their assets.”

- Facebook is trying to have it both ways, and can’t, argues Alexis Madrigal in the Atlantic: “Mark Zuckerberg wants his company’s role in the election to be seen like this: Facebook had a huge effect on voting — and no impact on votes.” News Feed favours engagement; noisy, populist candidates are better at driving engagement; therefore Facebook structurally favours populist candidates and cannot be neutral.

- Google has launched an internal investigation into whether, or to what extent, it played an unwitting part in Russian attempts to influence the U.S. election. (Google owns YouTube, which is a hot mess of fake news; also, Twitter traffic, which is easily manipulated with bots, has a role in creating Google search rankings.)

- Fake news is a squalid business, but it shouldn’t rob us of the pleasure of a really good hoax, like these ones:
